4 reasons why Web3 is a Critical Enabler for AI


With the AI hype firmly reinvigorated, we want to point out how Web3 tech will be a ‘critical enabler’ for the future of both AI and Data.

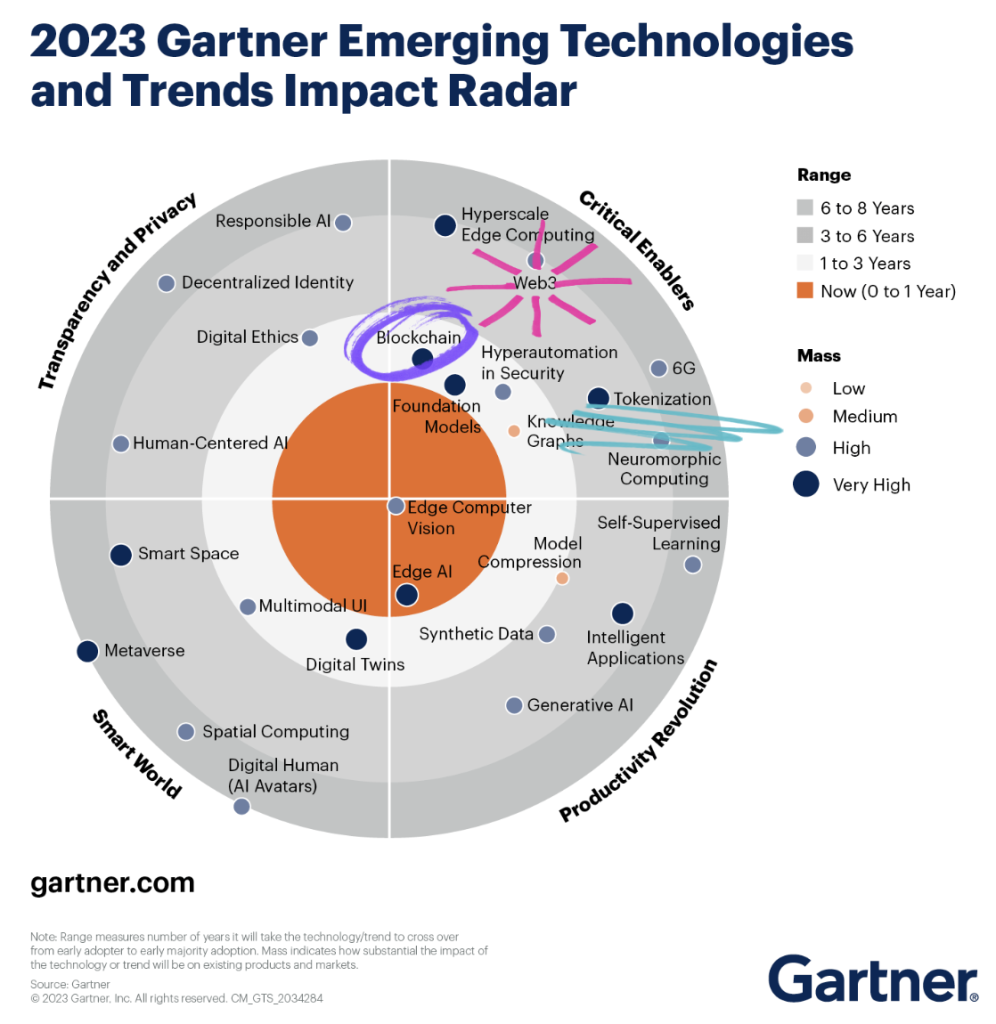

It’s not us saying this but Gartner, one of the world’s leading technology research firms. When our CMO recently shared Gartner’s Emerging Technologies and Trends Impact Radar, we could hear a few cheers from the team. We could feel the dots connecting, because this is why our team has been building Nevermined for the last few years.

Nevermined leverages Web3 tech to build open Data & AI tools. So, using the terminology from the Gartner visual, we’ll explain four ways in which the Data and AI space will benefit from Web3 technology.

1- Tokenization = Access to Data

One of Gartner’s senior AI analysts recently told us: “AI has a problem and that’s Data Availability.” And we couldn’t agree more. While the big Foundation Models feed on exabytes of publicly available data, the hyper-precision of use-case-specific AI will come from training on small but precise data sets. However, the data required for these Intelligent Applications (see bottom right quadrant) typically sits in silos or behind walls.

✅ How we see Web3 as a Critical Enabler for AI

Tokenizing Data means you can create on-chain Digital Identifiers and embed access conditions in them. Nevermined’s mission is to give Data Owners more control over access to their data. Owners who keep control are more willing to open up their silos, so AI will get a bigger and more relevant supply of data.
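To make the idea concrete, here is a minimal in-memory sketch of an identifier with embedded access conditions. It is an illustration only, not Nevermined's API and not a smart contract: `DataAsset`, `purchase_access`, `can_access`, the `did:example:` prefix and the token-denominated `price` are all hypothetical names we made up for this example.

```python
from dataclasses import dataclass, field
import hashlib


@dataclass
class DataAsset:
    """Hypothetical tokenized data asset: an identifier plus access conditions."""
    owner: str
    uri: str      # where the data lives; the data itself stays off-chain
    price: int    # access price in made-up token units
    allowed: set = field(default_factory=set)  # consumers who have been granted access

    @property
    def asset_id(self) -> str:
        # Derive a stable identifier from owner and location (illustrative scheme).
        digest = hashlib.sha256(f"{self.owner}|{self.uri}".encode()).hexdigest()
        return "did:example:" + digest[:16]


def purchase_access(asset: DataAsset, consumer: str, payment: int) -> bool:
    """Grant access only if the payment meets the owner's condition."""
    if payment < asset.price:
        return False
    asset.allowed.add(consumer)
    return True


def can_access(asset: DataAsset, consumer: str) -> bool:
    """The owner always has access; others need a recorded grant."""
    return consumer == asset.owner or consumer in asset.allowed
```

In a real deployment the grant and the payment check would live in a smart contract rather than in application memory; the point here is only that the access rule travels with the identifier.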

2- Tokenization = Bringing Compute to the Data

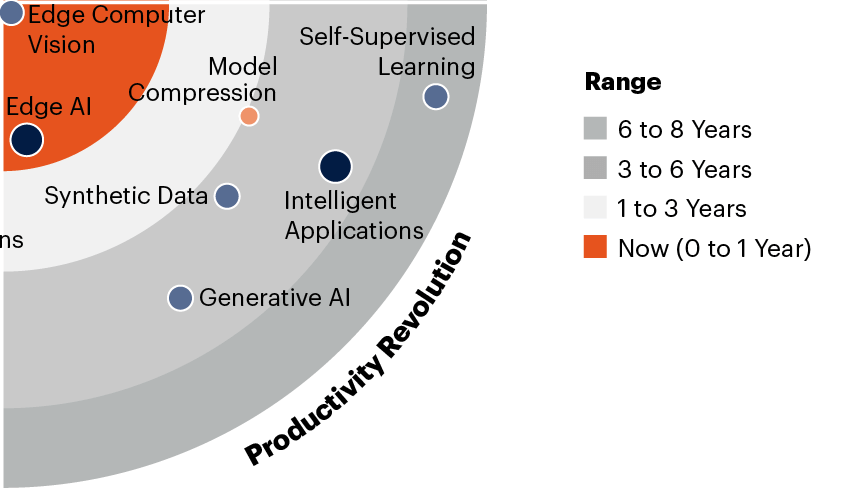

Gartner positions Edge AI in the orange area, which means that this technology is here or coming soon. Whether we call it Federated Learning, Remote Computing or Collaborative Computing (we’ve written about that before), the computing activity is moving away from a centralized server to the edge. Bringing the computation to the data and not the other way around is great, but the question remains: how do you run your AI on walled data in a secure and privacy-preserving manner?

✅ How we see Web3 as a Critical Enabler for AI

Tokenizing access to data means that Data Owners can allow Data Consumers to set up local compute environments in situ, i.e. where the data resides. This means that AI scientists can train their AIs on relevant walled data without having to worry about security or privacy as their algorithms can’t actually ‘see’ the data and will only take away the insights.
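A toy sketch of that "bring the compute to the data" pattern could look like the following. The `DataHost` class and its `run` method are hypothetical names for illustration. We also assume the submitted job is trusted to return only an aggregate: a production setup would need sandboxing and output checks, which this sketch does not attempt.

```python
from statistics import mean
from typing import Callable, Sequence


class DataHost:
    """Hypothetical data owner environment: jobs come in, raw records never leave."""

    def __init__(self, records: Sequence[float]):
        self._records = list(records)  # stays inside the host

    def run(self, job: Callable[[Sequence[float]], float]) -> float:
        """Run a consumer-supplied job locally and release only a single number."""
        result = job(self._records)
        if not isinstance(result, (int, float)):
            # Refuse anything that is not a scalar, e.g. a copy of the records.
            raise TypeError("jobs may only return a scalar insight")
        return float(result)


# The consumer ships the computation, not a request for the data.
host = DataHost([4.0, 8.0, 6.0])
average = host.run(mean)
```

The consumer ends up with `average` but never holds the underlying records, which is the property the paragraph above describes.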

3- Blockchain = Provenance

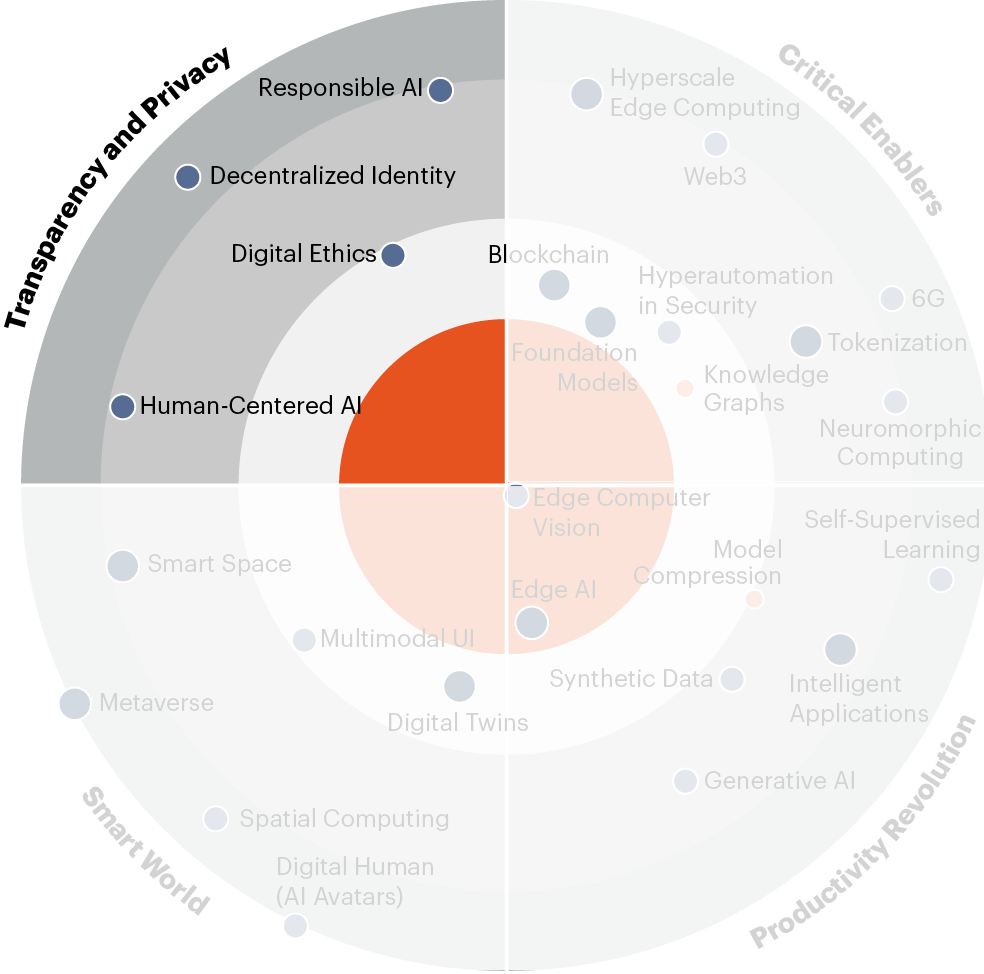

Look at what Gartner calls the top left quarter of their trend pie: ‘Transparency and Privacy’. They specifically single out ‘Responsible AI’, ‘Digital Ethics’ and ‘Human-Centered AI’. With AI being created and embraced by the big tech companies, it will be crucial to keep focusing on how data is being accessed, how it is being used and who it will eventually benefit.

✅ How we see Web3 as a Critical Enabler for AI

Once Data and AI are tokenized, blockchains register every interaction with them, as well as any business logic encoded into smart contracts, such as access conditions or pricing. This creates a level of Provenance and auditability that will contribute to AI becoming more transparent and privacy-preserving.
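The auditability property can be illustrated without a blockchain at all. Below is a minimal sketch of a hash-chained, append-only log in which each entry commits to the one before it, so later tampering is detectable. `ProvenanceLog` and the event fields are hypothetical; a real chain adds consensus and replication on top of this basic idea.

```python
import hashlib
import json


def _digest(prev_hash: str, event: dict) -> str:
    # Hash the event together with the previous entry's hash.
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


class ProvenanceLog:
    """Hypothetical append-only log of who accessed what."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry_hash = _digest(prev, event)
        self.entries.append({"event": event, "prev": prev, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


log = ProvenanceLog()
log.append({"actor": "model-x", "action": "access", "asset": "dataset-1"})
log.append({"actor": "model-x", "action": "train", "asset": "dataset-1"})
```

Calling `log.verify()` succeeds on the untouched log and fails as soon as any recorded event is altered after the fact.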

4- Web3 = Ecosystem and Incentive Design

“Human-Centered AI assumes a partnership model of people and AI working together”, says the Gartner report.

Once again: so true. For AI to be beneficial to all, it will have to be developed in an open fashion. OpenAI may be Open in name, but, just like other big AI players, it is already being sued for alleged copyright infringement over training its models on scraped images, text and code. Data needs AI and AI needs Data, but the incentive models are currently neither aligned nor easy to design and govern.

✅ How we see Web3 as a Critical Enabler for AI

We believe the killer application of Web3 is creating Digital Ecosystems. By applying Web3 tech and Web3 thinking to Data and AI, we can create Data Ecosystems with incentive structures that create mutual benefit.

Web3 = Wow3

We are very excited that the Gartner research validates all the premises we built Nevermined on. Their research identifies Blockchain, Web3 and Tokenization as Critical Enablers that create new business and monetization opportunities. The AI industry should rapidly familiarize itself with these components and understand the dynamics of the value creation this can bring.

In its simplest form, AI will pay for secure and traceable access to Data. More excitingly, this could come with attribution (AI x has used your data) and potentially lead to some kind of royalty. Plus, over time we will see the proliferation of Web3-style token economic models. AIs will be tokenized, open and remixable, possibly with their own AI-tokens to create value for Data Owners and AI Owners alike.
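As a back-of-the-envelope illustration of attribution leading to a royalty, here is one possible split rule: a payment is divided among Data Owners in proportion to how much of their data was used. The `split_royalty` function, the usage counts and the rounding rule (the leftover goes to the largest contributor) are our own assumptions for the example, not a description of any existing token model.

```python
from typing import Dict


def split_royalty(payment: int, usage: Dict[str, int]) -> Dict[str, int]:
    """Split an integer token payment pro rata by attributed usage."""
    total = sum(usage.values())
    if total <= 0:
        raise ValueError("no attributed usage to pay out against")
    # Integer shares, rounded down so we never pay out more than we received.
    shares = {owner: payment * count // total for owner, count in usage.items()}
    # Hand any rounding leftover to the largest contributor (arbitrary tie-break).
    leftover = payment - sum(shares.values())
    top_owner = max(usage, key=usage.get)
    shares[top_owner] += leftover
    return shares


# An AI pays 100 tokens; attribution says it used 3 records from alice, 1 from bob.
payout = split_royalty(100, {"alice": 3, "bob": 1})
```

Here `payout` gives alice three quarters of the payment and bob the rest, and the shares always add up to exactly the amount paid.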